tl;dr — I had very disturbing diplacusis (double hearing) during a really bad bout of influenza, but recovered after a month.

The Diplacusis Diary

Being a Tonmeister, and loving music all my life, I didn’t understand what drove some people, even those in my family, to dislike violins. Where I enjoyed beautiful, warm, expressive singing tone, they heard “tuneless cats wailing” or worse.

Whereas the main complainant among my relatives didn’t seem to mind piano music too much, orchestras and violins in particular were, to her, the equivalent of a knife edge being dragged squealing across a china plate.

How could there be such a difference?

Until last month, I had no idea. But now I know.

For three weeks, my right ear has presented me with hideously detuned ghost orchestras, squawking organ pipes, shrieking violins and cracked bells. Music encoded using codecs such as MP3 or AAC sounded like it was being played through loudspeakers whose cones had been torn apart, and any perception of stereo was lost: everything was shifted about 40° to the left, while demonic pitchless musicians wailed over my right shoulder. In short, all pleasure in music was replaced by agony, and my work as a performing musician, occasional record producer and film editor appeared finished.

This is an essay on the ailment diplacusis, and my journey to safety through it. To be more accurate, my particular case was diplacusis dysharmonica, where pitch is perceived normally in one ear, but wrongly in the other. This article is no substitute for a professional diagnosis and a course of therapy from a medical specialist, but it is published to show how a musician and amateur physicist (me) worked through the nightmare, and was healed by the brain and body’s own resources.

Yes, I’m better now and, indeed, most people recover without intervention. But, if you have begun a similar journey, please get checked by the best professional you can find because many different causes lead to the same ailment. Most triggers that the body can’t fix on its own can be cured by pharmaceutical or surgical intervention. Please don’t hesitate.

Where did it start?

I have normal hearing for a 51-year-old, gracefully growing older. There’s a little high-frequency tinnitus but nothing to worry about. Then, in May 2015 began my worst bout of influenza ever. This brought about the kind of coughing and congestion that kills older people.

While blowing my nose rather fiercely, I felt and heard something nasty, probably mucus, shoot up my right Eustachian tube and into my middle ear. Or perhaps too much pressure was used and something inside my middle ear became damaged?

Immediately, I felt a sense of pressure as if my ear needed to ‘pop’ and, as usual, there was a dullness of hearing. This is perfectly normal when the pressures either side of the tympanum are unequal. But also, there was a new acoustic effect, as if my eardrum were in direct physical contact with my throat. Breathing and swallowing became much louder than usual in this ear alone. And popping my ears to relieve pressure changed none of this.

So, in a very short space of time, I had an ear that felt completely full of something, and that would not respond to the normal procedures. The next day, I was checked by a doctor who wanted me to visit the audiology department at the hospital if things weren’t getting better. The tympanum is translucent, and an expert can diagnose much by shining bright light onto it.

What did I notice?

Day three dawned. Outside my house, off to the right from where I sit for my everyday work, there is a church. The bell, which was being tolled to call the congregation for the morning service, had developed a problem. It sounded as if it had been cracked, which was a pity because its sound was normally very pleasant, a reminder that this is a historic and pretty town. Later that day, there was space in the diary to visit the vicar to tell him about the sad accident that had happened in his bell tower in case he’d not noticed.

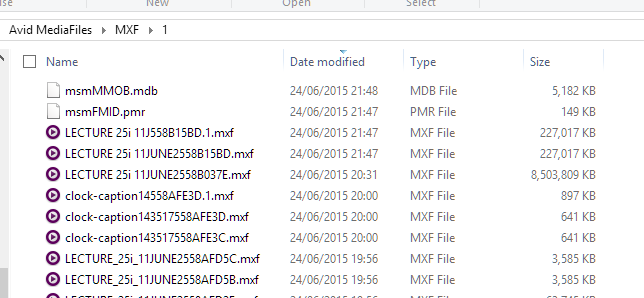

Then it was time to edit and master some music for a client. Despite the feeling of pressure in the right ear, sensitivity had returned so I fearlessly began work.

The first piece of music wasn’t from the usual excellent producer whose work normally went into this particular project and the difference certainly showed! The whole choir was way off to the left in the stereo soundstage, and the MP4 audio file sounded terribly distorted, as if encoded at a very low bitrate. The right hand channel, particularly, had incredible harmonic distortion and countless intermodulation products. I very nearly fired off a cheery email to my friend who usually provides this material, saying “it’s easy to tell this isn’t from you!”

Then I glanced at the meters and the waveform. The audio was in dual-channel mono. In other words, both audio streams were identical and panned dead centre. What on EARTH was I hearing? Were my speakers or amplifier blown?

I plugged my headphones into a separately amplified output. The sound was just as awful. But then the real horror began: when I turned the cans the other way around, the balance and wild distortion inside my head were identical, as if I’d not reversed the headphones at all.

So I checked just the left channel: and it was perfect. But with the right channel alone, not only was the sound like someone singing through a comb and paper, it was nearly a semitone sharp! The vocal timbre also sounded sped up, like a tape being played through a pitch shifter.

A first response

This was deeply unpleasant. “I’m broken!” was the first thought. After a lifetime of playing and loving music, and wondering why my mother didn’t like musical sounds at all, suddenly all my own pleasure in music was lost. The glory of stereo, “sound sculpted in space”, had gone. I could no longer tell if an instrument or singer was in tune. And judgement on matters of tonal balance was impossible.

Every day in the press, we read about people whose lives have been utterly ruined by accidents. Losing part of one ear is hardly equivalent to being crippled and confined to a wheelchair for ever. And if a person suddenly disabled can find a way through, it wouldn’t be too much trouble for me with one-and-a-half ears and all my limbs still working.

A bit sad for a musician and producer, though — the end of my lifetime’s ambition.

That afternoon, I played piano for a rehearsal. The whole echo of the church appeared routed through a pitch-shifter and screamed mockingly at me like a choir in the worst kind of horror movie.

Analysis

So, that evening, there was time to analyse what was happening.

Speech? All sibilants on the left, and sounding sped-up in the right ear alone.

Sine waves? Fine up to about 2kHz, then bad intermodulation distortion when fed to both ears: and pitch shift above 2kHz in the right ear alone.
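For anyone who wants to repeat this kind of sine-wave test, here is a minimal sketch in Python that writes a tone to a stereo WAV file, routed to the left ear, the right ear or both. It uses only the standard library. The function name, the file names and the default level are assumptions chosen for illustration, not a record of the tools used for the tests described here. Keep the playback volume low.

```python
import math
import struct
import wave


def write_test_tone(path, freq_hz, ear="both", seconds=2.0,
                    sample_rate=44100, level=0.2):
    """Write a 16-bit stereo WAV holding a sine tone in one or both channels."""
    if ear not in ("left", "right", "both"):
        raise ValueError("ear must be 'left', 'right' or 'both'")
    n_frames = int(seconds * sample_rate)
    peak = int(level * 32767)  # stay well below full scale (assumed safe default)
    frames = bytearray()
    for n in range(n_frames):
        sample = int(peak * math.sin(2 * math.pi * freq_hz * n / sample_rate))
        left = sample if ear in ("left", "both") else 0
        right = sample if ear in ("right", "both") else 0
        frames += struct.pack("<hh", left, right)  # interleaved left, right
    with wave.open(path, "wb") as wav:
        wav.setnchannels(2)
        wav.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))
    return n_frames


if __name__ == "__main__":
    # One file per ear at a few frequencies either side of 2kHz.
    for freq in (500, 1000, 2000, 3000):
        for ear in ("left", "right"):
            write_test_tone(f"tone_{freq}Hz_{ear}.wav", freq, ear=ear)
```

Playing the left and right files of the same frequency one after the other, on headphones, makes any pitch difference between the ears easy to hear.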

Playing the piano? Everything an octave above Middle C and higher was surrounded by a vile cluster of discordant tones.

What about fun with heavily-panned Beatles’ songs, where the vocals or an instrument are fully on one stereo channel or the other? The trumpet solo in “Penny Lane” was unlistenable in part, though the brain did a good job of pulling some of it back into pitch on its lower notes. Over this, I had no conscious control: it was rather like watching a remotely controlled machine at work.

The Nat ‘King’ Cole album “Welcome To The Club” has the vocals bizarrely panned entirely on one channel. You can see where I’m going with this! And, yes, he was singing a semitone sharp. So was my enjoyment of music and my professional judgement over for life?

Over the week that followed, experiments continued. Every morning I’d be woken by the church clock chiming with all its harmonics in the wrong pitch (though the fundamental tone was fine), then I’d try the piano: there were clusters of evil upper partials on every note, and harmonies brought no pleasure or contrast. And recorded music encoded with perceptual codecs still sounded as if played through a class B amplifier with terrible crossover distortion.

Thinking in Physics

What might have been happening inside my ear? The feeling of pressure was still there, and everything above about 1.5kHz was pitch-shifted up.

If the workings of the ear are unknown to you, I suggest that, at this point, you take a look at some Wikipedia entries particularly regarding the tympanum, the ossicles, the cochlea and the organ of Corti. Remember how standing waves are set up along the basilar membrane, turning it into a spectrum analyser.

If you have access to a tone generator, try this: feed 2kHz or 3kHz into headphones, then clench your jaw strongly. Did you hear the pitch of the tone go up? Is the pressure on your ear affecting the bone holding your cochlea and therefore changing its shape, altering the places along the basilar membrane where different frequencies resonate, thereby fooling the brain into perceiving a different pitch?

Maybe something, maybe mucus, was putting pressure constantly on my cochlea, possibly on its oval window, permanently changing the places where resonance occurs when frequencies are higher than about 1.5kHz? This is in line with the place theory of pitch perception.
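To put a rough number on the place theory idea, the sketch below uses Greenwood's published frequency-to-position fit for the human cochlea, a standard approximation rather than anything measured on my own ear. It estimates how far along the basilar membrane a resonance would have to move to make a tone sound a semitone sharp. The function names are assumptions for illustration, and the model says nothing about whether pressure on the cochlea can actually cause such a shift.

```python
import math

# Greenwood's (1990) fit for the human cochlea. x is the fractional distance
# along the basilar membrane, from the apex (0.0) to the base (1.0).
A, ALPHA, K = 165.4, 2.1, 0.88


def place_to_freq(x):
    """Characteristic frequency in Hz at fractional position x."""
    return A * (10 ** (ALPHA * x) - K)


def freq_to_place(freq_hz):
    """Fractional position along the membrane that responds best to freq_hz."""
    return math.log10(freq_hz / A + K) / ALPHA


def place_shift_for_semitones(freq_hz, semitones):
    """Fraction of the membrane's length between freq_hz and the pitch
    `semitones` above it (equal temperament, 12 semitones per octave)."""
    shifted = freq_hz * 2 ** (semitones / 12)
    return freq_to_place(shifted) - freq_to_place(freq_hz)


if __name__ == "__main__":
    shift = place_shift_for_semitones(2000.0, 1.0)
    print(f"Semitone at 2kHz: {shift:.4f} of the membrane's length")
```

On this model a semitone at 2kHz corresponds to a displacement of only about one percent of the membrane's length, so a very small mechanical change would be enough to account for the pitch error.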

And perhaps the audio that is normally heavily modified by the MP3 or AAC algorithms, disguised by the normal ear’s processes, is revealed in all its distortion by my suddenly revelatory but damaged cochlea? In other words, the spectral lines that these codecs decide to distort, lost in the ear’s usual perception, are shown in all their awfulness now that they are shifted for the benefit of my aural education.

How to fix my ear?

So at this point, about two weeks before writing this essay, I resolved to get through this in several ways.

- Using commonly available open source software, I could find where the frequency break in my damaged ear was, and design a process that maps frequencies above this break to slightly lower frequencies, thus restoring normal pitch perception for headphone use. Perhaps even a digital hearing-aid like this is possible?

- Middle ear infections cause pressure in the middle ear, so I was ready to do all that is possible to detect and clear any infection.

- I still had influenza and was very congested, so it would be useful to keep using Olbas Oil and pseudoephedrine to clear any other sinus and Eustachian tube blockages.

- Retrain my brain regarding pitch. After all, only after birth could a baby’s already-formed brain have begun to compare the pitch sensations generated by the two ears and, somehow, correlate them, so why not try to restart the process?
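The frequency-mapping idea in the first item can be sketched in a few lines of Python. This is only the arithmetic of the mapping, not a working hearing-aid: it assumes a deliberately crude model in which the affected ear hears everything above a single break frequency sharp by a fixed number of semitones. The 1.5kHz break and one-semitone error are taken from the rough figures in this essay, and all the function names are hypothetical.

```python
import math


def semitones_between(f_ref_hz, f_heard_hz):
    """Interval from f_ref_hz to f_heard_hz in equal-tempered semitones."""
    return 12 * math.log2(f_heard_hz / f_ref_hz)


def perceived_frequency(freq_hz, break_hz=1500.0, sharp_semitones=1.0):
    """Assumed model of the affected ear: tones above break_hz sound sharp."""
    if freq_hz <= break_hz:
        return freq_hz
    return freq_hz * 2 ** (sharp_semitones / 12)


def corrected_frequency(target_hz, break_hz=1500.0, sharp_semitones=1.0):
    """Frequency to present so that the modelled ear perceives target_hz."""
    ratio = 2 ** (sharp_semitones / 12)
    if target_hz <= break_hz:
        return target_hz  # below the break nothing needs changing
    if target_hz <= break_hz * ratio:
        # This step model cannot produce pitches just above the break,
        # so clamp to the break itself.
        return break_hz
    return target_hz / ratio


if __name__ == "__main__":
    for target in (1000.0, 2000.0, 4000.0):
        print(target, round(corrected_frequency(target), 1))
```

In practice `sharp_semitones` could be estimated by matching a tone in the bad ear against an adjustable tone in the good ear and passing the two frequencies to `semitones_between`. A real ear is unlikely to have such a sharp break, so the error would need measuring at several frequencies, and shifting real audio rather than single tones needs a proper pitch-shifting algorithm.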

The strong upper harmonics in violins and pipe organs howled violently in my right ear: and, if my family member who hated such instruments also had unresolved diplacusis, perhaps this was the reason for her dislike of such sounds?

Cured

Now, the good news, for me at least. My ear has become decongested in the last week, and the shrill demonic orchestra and choir have faded to almost nothing. My stereo hearing is now back to its normal clean status, and music is a constant pleasure. I didn’t need to make my own hearing-aid, the decongestants seemed to work, and my self-training with tones and careful music listening perhaps helped too.

Sometimes, diplacusis can be healed in this way by the body and brain’s own natural functions. This has taken about a month for me.

If you have just experienced the very disturbing onset of diplacusis, maybe this essay has given you hope? But please get to a hearing specialist as soon as you can, in case your situation is different from mine, and you need surgical intervention.

And never blow your nose too hard.